Dr. Chatbot Will See You Now

The AI revolution has arrived – in case you haven’t heard – and its benefits and drawbacks are the subject of speculation, especially in the world of medicine. At Schulich Medicine & Dentistry, many world-leading experts and scientists have been using AI for years. While most of them are optimistic about the role AI will play in revolutionizing health care, their positive outlooks are tempered with concerns.

Luke Stark, PhD, an assistant professor in the Faculty of Information & Media Studies (FIMS) at Western, knows AI. As a New York Times-quoted author and researcher, he’s an expert in the ethical, historical, and social impacts of AI systems and how technologies, such as ChatGPT, will affect society. He’s also doing preliminary work with Schulich Medicine & Dentistry’s curriculum committee to prepare for AI entering the classroom.

“AI in medicine is fascinating and comes with a long history of possibilities,” said Stark. “The question has always been, ‘how will AI work within the three areas of research, patient experience and medical expertise?’”

Stark believes the answers are a moving target because AI’s medical future is still unknown.

“If saving time is a priority for, say, a family doctor, AI will probably create more volume, which means more patients – not more time,” said Stark. “At the end of the day, time saving and volume increases (attributed to AI) will not equal increased empathy and patient care. It’s not difficult to see where this might lead.” Down a path to a two-tiered system where the marginalized get off-loaded to an AI chatbot while the privileged maintain access to human doctors? Maybe, said Stark.

“Automation is often associated with progress, and it often makes promises of creating more time. But time for whom?” warned Stark. “Traditionally, the privileged benefit the most from technology while the working class are the most negatively affected, and health care is no different.”

The big questions

Four Schulich Medicine doctors and researchers are trying to understand how AI fits into their practice, the healthcare system, and the world at large.

Dr. Wael Haddara

A scarecrow or a beacon?

When Haddara, Chair of Critical Care Medicine, is on rounds in the ICU, he often ponders big questions: Can a robotic physician powered by artificial intelligence offer more knowledge and empathy than actual physicians when responding to patient questions? What happens when AI models default to systemically racist, biased or discriminatory conclusions?

“Artificial Intelligence holds much promise and also considerable fear and anxiety,” said Haddara. “And while AI presents us with new challenges, I believe this juxtaposition of hope and fear has been an integral feature of the human condition since the advent of modernity several centuries ago.”

Haddara has serious concerns about the pace at which AI is being embraced in medicine.

“Developments are so fast paced that the present state of being has been labelled ‘liquid modernity,’” said Haddara. “But while the world has changed, and human beings have struggled, the essence of that struggle remains the same. Technology can either be a scarecrow or a beacon. We can use and implement technology uncritically or we can examine the meaning of our humanity in light of these disruptive and radical technologies.” Haddara believes the main challenge for the medical profession is to use AI to protect and advance the essence of medicine, which means recognizing the essential dignity of all human beings.

“Medical education must continue to emphasize humanity,” said Haddara. “Failing to recognize this challenge will mean that AI will define humanity rather than the other way around.”

Lorelei Lingard, PhD

An Rx for clear communication?

One of Lingard’s many interests is the socializing power of language, through which medical learners develop their ability to ‘talk the talk’ of medicine. These days, she is exploring ChatGPT and how it can influence, improve and mediate research writing. As a world leader in health communication, Lingard sees enormous potential in an AI-mediated world.

“For me,” said Lingard, professor in Medicine and senior scientist in the Centre for Education Research & Innovation, “the problem with scientific writing is that it can be boring and full of jargon.” Will AI alleviate these issues?

“Think of generative AI as a counterpoint to the limits of our imaginations,” said Lingard. “There’s huge potential for AI to expand the breadth of medical research questions, to disrupt the shape of our assumptions and to brainstorm.”

As experts in their fields, doctors and researchers will be able to identify when AI is wrong and when it’s not, said Lingard. She pointed out the danger of undergraduates, who are not yet experts, using AI without verifying the information they receive.

“I can easily envision a world where we will teach students incremental ChatGPT prompting techniques.”

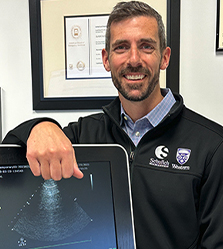

Dr. Robert Arntfield

EQ or IQ?

Dr. Arntfield uses AI to recognize patterns in lung ultrasound images, mimicking the interpretation of a trained clinician. Through a project called Deep Breathe, Arntfield and his team are creating a data-driven AI ecosystem where portability and computer vision can unlock new possibilities for those treating or suffering from respiratory problems.

“Four or five years ago I began expecting a revolution in AI in medicine,” said Arntfield, an associate professor in Critical Care Medicine. “With an AI-driven ultrasound, for example, it will be a lot like a stethoscope 3.0. We need doctors with front-of-mind knowledge, but the mark of an excellent doctor is not the memorization of knowledge. It’s empathetic knowledge, emotional intelligence that matter most. When encyclopedic knowledge is no longer advantaged because of AI, empathy and emotional intelligence (EQ) can reign supreme.”

Arntfield is an optimist when it comes to generative AI, but he is aware of the potential dangers, and said a full embrace of AI should come with formal regulations.

“It will take both the optimistic and apocalyptic thinkers to fully understand the implications of AI,” said Arntfield. “Some say we’ve failed to fully predict the dangers of the internet, and that there’s always a price to pay for progress.”

Maitray Patel, MD/PhD candidate

An end to critical thinking?

Patel has been on the AI bandwagon for more than four years, first studying concussions. These days, machine learning is helping Patel identify key markers in broad-spectrum blood work to help classify long COVID in patients. Without AI, Patel said, researchers would miss patterns and intricacies between molecules.

“Where old-school research methods can’t identify complex relationships in a broad-spectrum test,” said Patel, “AI is able to pick up non-linear trends and patterns that would normally be very challenging and time-consuming to figure out.” At the same time, Patel worries that generative AI will discourage people from thinking critically, allowing damaging false narratives to emerge. However, in the right hands, the sky is the limit for AI.

“ChatGPT can provide a model for my literature review. It can help me with my research question by opening a door to broader ideas I may not have considered,” said Patel. “It’s basically a good sounding board that helps me take a step back and reconsider my original questions.”